Everyone loves having their own domain name. For around $10 per year you can have a short, memorable string that points to your content and makes up a large part of its identity. I can’t speak for everyone, but personally I would rather go to finalbastion.com than 143.198.60.42.

This system works out great until the domain owner neglects to renew it. When this happens the content is often still technically on the internet, but it becomes much harder to reach. When people host their site on Blogger or WordPress with a custom domain, the hosting provider usually redirects the native domain (aroundthespiral.wordpress.com) to the custom one (aroundthespiral.com). So when the custom domain expires, the native domain still redirects to the expired one and the content can no longer be reached through either address. This is exactly what happened to edwardlifegem.com back in January, and it shows no signs of being resolved. When arcanumsarchives.com expired too, that’s what expedited this project.

Gathering Data

Before doing anything else I made a new table in MySQL to hold this new information. I then populated the table with each site’s p101 domain and normal domain, which fills the first two columns, and gave the crawler’s MySQL user permission to read and update it.

CREATE TABLE custom_domains(new_domain VARCHAR(255) PRIMARY KEY NOT NULL, domain VARCHAR(255), live VARCHAR(1), reg_date DATE, exp_date DATE);

INSERT INTO custom_domains(new_domain, domain) SELECT new_domain, url FROM sites WHERE url NOT LIKE '%blogspot.com' AND url NOT LIKE '%wordpress.com' AND url NOT LIKE '%tumblr.com' AND ...

GRANT SELECT, UPDATE ON db_name.custom_domains TO 'py-crawler'@'localhost';

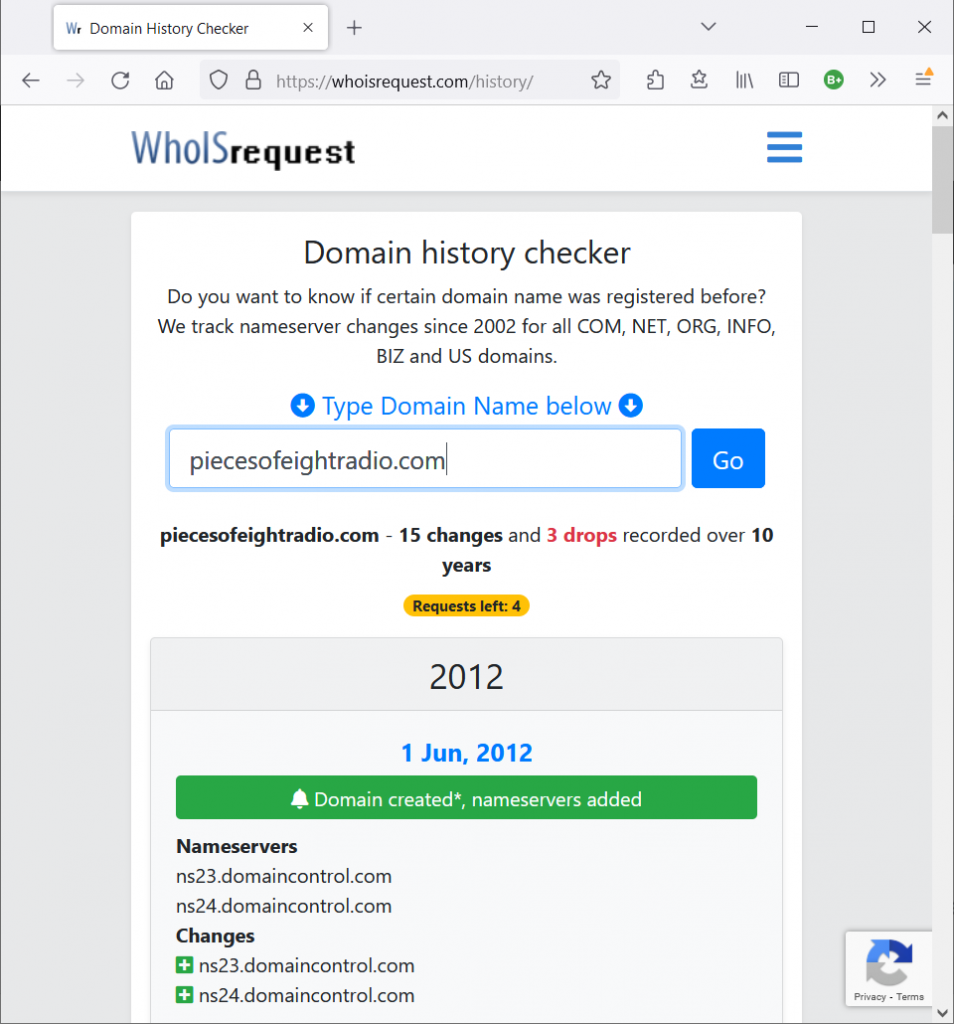

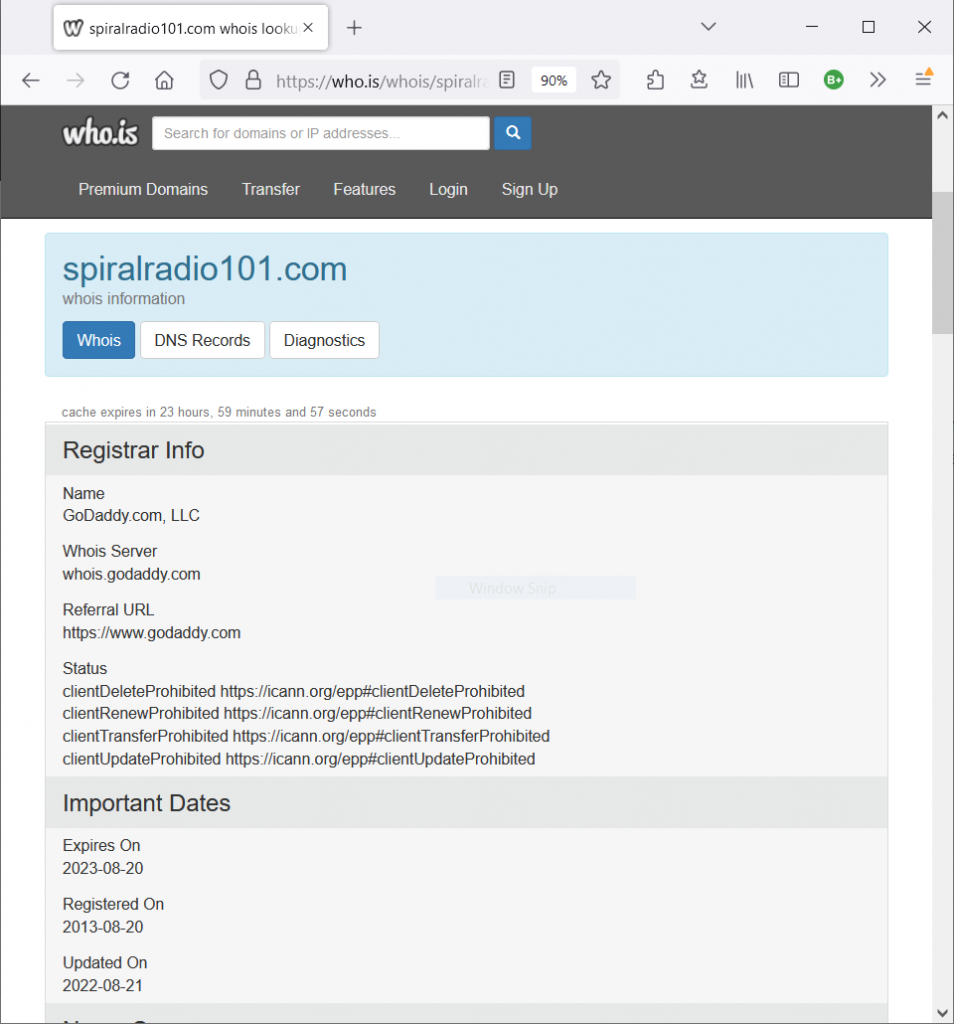

Where I went for information depended on whether the website for the domain was still up. For live sites I used who.is, and for those which are down or which host unrelated content I went to whoisrequest.com/history. A minor inconvenience is that you can only make five requests before the website IP-bans you for 24 hours. I was able to get around this by using a free browser-based VPN, changing the country every five queries.

UPDATE custom_domains SET live='y', reg_date='2013-08-20', exp_date='2023-08-20' WHERE new_domain='spiralradio.p101';

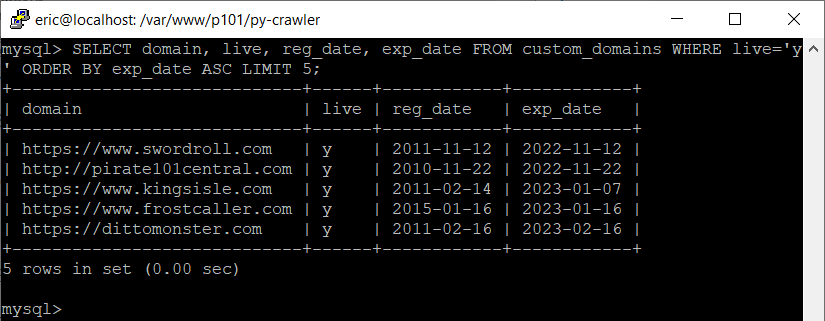

Filling the table wasn’t difficult, just kind of tedious. After going through all of the sites with custom domains I found two which are most at risk: swordroll.com and pirate101central.com, which both expire in less than a month.

This is great, problem solved, now I know which websites to verify backups for. Except, that assumes I will overcome laziness and repeat these lookups by hand in time to find the next expiring domain.

Automating the Process

Yup, you guessed it, time to take human laziness out of the equation by having a script do the checking automatically at regular intervals. A lot of this script I copied over from my automated activity-checking script, so I’m only going to explain the bits which are new.

The first new thing in this script is the domain-checking aspect. To do this I used pip to install a Python package called python-whois, which is imported as whois.

sudo pip3 install python-whois

Once this package is installed it can be used to check a domain, d, from the table I set up previously. If the package is able to find information on the domain then a dictionary is stored in w. Otherwise, the error is written to the same error output file as before. The continue at the end tells the loop to skip all of the following instructions in the current iteration and move on to the next domain.

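try:
    # Look up the WHOIS record for domain d.
    w = whois.whois(d)
except whois.parser.PywhoisError as e:
    # Write the scrape header once per run, before the first error.
    if not error_written:
        error_log.write("Scrape: "+str(now_t)+"\n")
        error_written = True
    # The exception must be converted to a string before concatenating.
    error_log.write("\t "+str(e)+"\n")
    continue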

Resolvable domains return the same information that was displayed on the whois websites that I used earlier: registration date, expiration date, update date, and name server addresses. Each of these fields can have multiple (but closely related) entries for some reason, so in the next step I took the earliest of the dates and the first name server address.

exp = w.expiration_date
if isinstance(exp, list):
    # Several dates were returned; keep the earliest one.
    exp = min(exp).date()
else:
    exp = exp.date()
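The same list-or-single-value handling applies to the registration and update dates, so it could be pulled into one helper. This is only a minimal sketch, not the code from the script: the name earliest_date is made up for illustration, and it assumes each field is either None, a single datetime, or a list of datetimes.

```python
import datetime


def earliest_date(value):
    """Return the earliest date in a whois date field, or None if empty.

    Assumes value is None, a single datetime, or a list of datetimes.
    """
    if value is None:
        return None
    if isinstance(value, list):
        # Drop missing entries, then keep the earliest remaining one.
        candidates = [v for v in value if v is not None]
        if not candidates:
            return None
        value = min(candidates)
    return value.date()
```

With a helper like this, a missing field comes back as None instead of raising an AttributeError, so the calling code can decide whether to skip the domain.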

The first thing I check each domain for is whether it has been updated since the last scrape. I don’t know how terribly important this information is, but why not grab it.

if upd > now - datetime.timedelta(days=7):
    if not renew_written:
        renew_log.write("Scrape: "+str(now_t)+"\n")
        renew_written = True
    renew_log.write("\t"+d+" updated on "+str(upd)+"\n")
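The "write the Scrape header once, then the entry" pattern shows up in every check, so it could be wrapped in a small class. The sketch below is hypothetical (the ScrapeLog name and its entry method are not from the script) and uses an in-memory stream in place of the real log file so it can be run on its own.

```python
import io


class ScrapeLog:
    """Write a 'Scrape: <time>' header once, just before the first entry."""

    def __init__(self, stream, timestamp):
        self.stream = stream
        self.timestamp = timestamp
        self.header_written = False

    def entry(self, message):
        # Only runs that actually log something get a header.
        if not self.header_written:
            self.stream.write("Scrape: " + str(self.timestamp) + "\n")
            self.header_written = True
        self.stream.write("\t" + message + "\n")


# Demonstration with an in-memory stream instead of a real log file.
demo = io.StringIO()
log = ScrapeLog(demo, "2023-07-24 03:00:00")
log.entry("example.com updated on 2023-07-20")
log.entry("example.org parked")
```

Each check would then shrink to a single log.entry(...) call, and every line is guaranteed to end with a newline.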

The next check is to see if the name servers are domains which are obviously used for parking. When a domain is parked, that almost always means it is under new ownership. I couldn’t think of a better way to do this than to check if the strings “park” or “expire” appear in the name server. If that is the case then I want to set live to n to reflect the fact that the site is no longer available under its original purpose.

if "park" in ns.lower() or "expire" in ns.lower():
    # Mark the site as no longer live under its original purpose.
    mycursor = myuser.cursor()
    # %s is the placeholder style used by the common MySQL drivers.
    mycursor.execute("UPDATE custom_domains SET live='n' WHERE new_domain=%s;", (i[0],))
    myuser.commit()
    mycursor.close()
    if not renew_written:
        renew_log.write("Scrape: "+str(now_t)+"\n")
        renew_written = True
    renew_log.write("\t"+d+" parked\n")
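Since whois can return several name servers, sometimes in upper case, the substring test could also be run against all of them, ignoring case. The following is a rough sketch under those assumptions. The function name looks_parked and the example name servers are invented for illustration.

```python
def looks_parked(name_servers, markers=("park", "expire")):
    """Return True if any name server contains one of the marker strings.

    Accepts None, a single string, or a list of strings.
    """
    if name_servers is None:
        return False
    if isinstance(name_servers, str):
        name_servers = [name_servers]
    # Compare in lower case so "NS1.PARKED.EXAMPLE" matches "park".
    return any(
        marker in ns.lower()
        for ns in name_servers
        for marker in markers
    )
```

This is still a crude heuristic: a legitimate host whose name happens to contain "park" (say, something with "spark" in it) would be flagged as well, so a hit is worth a manual look before trusting it.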

If the domain isn’t parked then the next step is to see if the domain was renewed. This is the case when the expiration date found through whois is later than the expiration date currently stored in the table. This is good; the table gets updated to reflect the renewal and the site survives in its native location for another year!

elif exp > i[2]:
    if not renew_written:
        renew_log.write("Scrape: "+str(now_t)+"\n")
        renew_written = True
    renew_log.write("\t"+d+" next renewal: "+str(exp)+"\n")
    mycursor = myuser.cursor()
    # Pass the values as parameters instead of concatenating them into the SQL.
    mycursor.execute("UPDATE custom_domains SET exp_date=%s WHERE new_domain=%s;", (str(exp), i[0]))
    myuser.commit()
    mycursor.close()
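One way to try out an update like this without touching the real table is to run it against a throwaway database. The sketch below is not part of the script: it uses Python's built-in sqlite3 module as a stand-in for MySQL, the helper name set_exp_date and the example.p101 row are made up, and sqlite3 uses ? placeholders where MySQL drivers typically use %s.

```python
import sqlite3

# In-memory SQLite database standing in for the MySQL one.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE custom_domains("
    "new_domain VARCHAR(255) PRIMARY KEY NOT NULL, domain VARCHAR(255), "
    "live VARCHAR(1), reg_date DATE, exp_date DATE)"
)
# Hypothetical row used only for this demonstration.
conn.execute(
    "INSERT INTO custom_domains VALUES (?, ?, ?, ?, ?)",
    ("example.p101", "example.com", "y", "2013-08-20", "2023-08-20"),
)


def set_exp_date(connection, new_domain, exp_date):
    """Store a new expiration date using placeholders, not concatenation."""
    connection.execute(
        "UPDATE custom_domains SET exp_date = ? WHERE new_domain = ?",
        (str(exp_date), new_domain),
    )
    connection.commit()


set_exp_date(conn, "example.p101", "2024-08-20")
row = conn.execute(
    "SELECT exp_date FROM custom_domains WHERE new_domain = ?",
    ("example.p101",),
).fetchone()
```

Passing the values as a tuple lets the driver handle quoting, so a stray apostrophe in a value cannot break the statement.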

The last check I could think to do is whether the domain in question is going to expire within 30 days. This was the main reason for the project in the first place. I wish there was a better way to notify myself when an expiration is imminent, but I don’t really want to (or know how to) set up a mail server just yet.

elif exp < now + datetime.timedelta(days=30):
    if not renew_written:
        renew_log.write("Scrape: "+str(now_t)+"\n")
        renew_written = True
    renew_log.write("\t"+d+" expiring on "+str(exp)+"\n")
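The order of the three checks matters, and it is easier to see when the decision is separated from the logging and database calls. Here is a minimal sketch of that idea. The classify function and its return labels are hypothetical, and it assumes exp, stored_exp and today are all datetime.date values.

```python
import datetime


def classify(exp, stored_exp, parked, today, warn_days=30):
    """Return 'parked', 'renewed', 'expiring', or 'ok' for one domain.

    Mirrors the if/elif order: parking wins, then renewal, then the warning.
    """
    if parked:
        return "parked"
    if exp > stored_exp:
        return "renewed"
    if exp < today + datetime.timedelta(days=warn_days):
        return "expiring"
    return "ok"


today = datetime.date(2023, 7, 24)
status = classify(
    exp=datetime.date(2023, 8, 20),
    stored_exp=datetime.date(2023, 8, 20),
    parked=False,
    today=today,
)
```

Because the branches are exclusive, a domain whose new expiration date is later than the stored one is reported as renewed in that run even if the new date is still less than 30 days away. The warning would only show up on the following run.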

The final step is to add this script to the crontab so that it executes on a regular schedule. I opted to do this on a weekly basis since domain expirations happen on a set schedule and you know far enough in advance when they will happen. The syntax is a little weird but I followed this guide: Linux crontab command help. The entry below runs the script every Monday at 03:00.

sudo crontab -e

00 03 * * Mon python3 /var/www/p101/nic/scripts/domain_exp_checker.py

The page with the table itself is hosted here: Expiring Domains.