Back in May I archived the Pirate101 Subreddit in the midst of Reddit’s API shutdown. This was necessary to grab content created up to that point, but I would need to periodically repeat the process manually in order to grab new content. There is no way I would remember to do this on a regular basis, so I set out to automate the process.

Improving bdfr

One caveat of using the bdfr tool is that it takes a long time to run to completion, on the order of hours. I could let it do this in the background, but if I only needed the most recent month’s worth of posts it would be a colossal waste of time and resources. With this in mind I set out to modify bdfr to be more efficient.

I have never modified an installed package before, so I was nervous at first that I would break something. Thankfully bdfr is an open-source pip package written in Python, so I can simply open up the constituent files and change things as I see fit. To see where the package is installed on your computer, use this command:

pip show bdfr

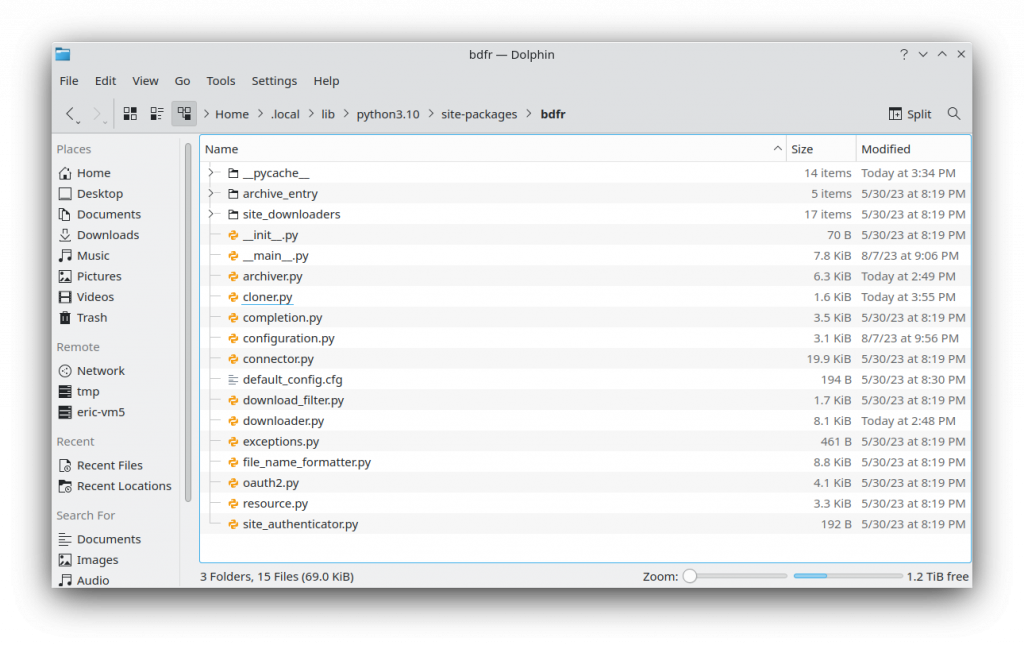

This prints a lot of information about the package and then at the very bottom is the location. In my case, it is located in /home/eric/.local/lib/python3.10/site-packages/bdfr. There are several constituent files that make up the package and will need to be modified.
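The same lookup can also be done from Python itself. The following is a minimal sketch using only the standard library; the helper name `package_dir` is hypothetical, and the demo looks up the standard `json` package so that it runs even on a machine without bdfr.

```python
import importlib.util
from pathlib import Path


def package_dir(name):
    """Return the folder a top-level package is imported from, or None if it cannot be found."""
    spec = importlib.util.find_spec(name)
    if spec is None or spec.origin is None:
        return None
    return Path(spec.origin).parent


# Demo with a standard-library package; swap in "bdfr" where it is installed.
print(package_dir("json"))
```

Passing `"bdfr"` instead should print the same folder that `pip show bdfr` reports, with the package name appended.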

Adding a CLI Argument

The first thing I need to do is add a command line argument for this functionality I want to add. There is one CLI argument relating to the time sorting of posts but it cannot be used to selectively download posts. For this reason I am adding a “since” argument. This is done in __main__.py and configuration.py.

    # Excerpt of __main__.py, lines 47-50 of modified bdfr v2.6.2
        click.option("-u", "--user", type=str, multiple=True, default=None),
        click.option("-v", "--verbose", default=None, count=True),
        click.option("--since", is_flag=False, default=None)
    ]

Click is a Python package which makes handling command line arguments much easier. The three options above set a Reddit user to download, toggle verbosity, and add my new “since” argument, respectively. Each option carries the relevant information such as its short form (“-u”), long form (“--user”), type, and so on. All of them together form a list which is stored for use in the other files.

    from datetime import datetime

    # Excerpt of configuration.py lines 56-64 of modified bdfr v2.6.2
    self.verbose: int = 0

    # Archiver-specific options
    self.all_comments = False
    self.format = "json"
    self.comment_context: bool = False

    # Added by me
    self.since: datetime = None

While __main__.py sets up the command line arguments, configuration.py holds the underlying variables. I imported Python’s datetime module for its datatype, which makes date arithmetic easier. Then further down the file I created the self.since attribute to contain the argument data. This is just an initialization to None; the program fills in the value automatically when the argument is supplied. Note that the value arrives from the command line as a string, so the actual conversion to a datetime happens later in cloner.py.
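A command line value always arrives as text, so a date argument has to be converted somewhere. One option is to validate it at parse time. The sketch below uses the standard library's argparse rather than click so that it runs without extra installs; it is not how bdfr handles its options, and `parse_since` and `build_parser` are hypothetical names.

```python
import argparse
from datetime import datetime


def parse_since(value):
    """Convert a YYYYMMDD string to a datetime, rejecting anything else."""
    try:
        return datetime.strptime(value, "%Y%m%d")
    except ValueError:
        raise argparse.ArgumentTypeError(f"expected YYYYMMDD, got {value!r}")


def build_parser():
    """Build a tiny parser with a single optional --since argument."""
    parser = argparse.ArgumentParser(prog="since-demo")
    parser.add_argument("--since", type=parse_since, default=None)
    return parser


# Parse an explicit argument list instead of the real command line.
args = build_parser().parse_args(["--since", "20230820"])
print(args.since)
```

With this approach a malformed date is rejected before any downloading starts, and leaving the option off simply yields None.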

Checking for Submission Date

The final modification comes in cloner.py, the file which delegates to downloader.py and archiver.py to download submissions. As before, the datetime module must be imported for this to work. Under the download() method there is a set of nested for loops that iterate through each desired Subreddit or user. I added an if statement to check whether each submission falls within the right timeframe.

    from datetime import datetime

    # Excerpt of cloner.py lines 22-34 of modified bdfr v2.6.2
    def download(self):
        for generator in self.reddit_lists:
            try:
                for submission in generator:
                    try:
                        if datetime.strptime(self.args.since, "%Y%m%d") > datetime.fromtimestamp(submission.created_utc):
                            logger.info(f"Submission {submission.id} filtered due to date.")
                            if self.args.sort == "new":
                                logger.info("Sorted by new. List complete")
                                break
                            continue
                        self._download_submission(submission)
                        self.write_entry(submission)

If the provided date is more recent than the submission, a short message is printed to the terminal and the submission is skipped. This functionality by itself is fairly general, but I added a second statement to check if the current list is sorted by new. When that is the case every following post will also fall outside the desired window, so it is best to simply stop iterating. This is handled by the break statement, which exits the inner loop. In the event that more than one Subreddit was provided to scrape, the code then moves on to the next.
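The same check can be written as a small standalone function. This is a sketch rather than the code in the modified cloner.py. It assumes the `--since` value is a YYYYMMDD string or None, treats that date as midnight UTC, and does nothing when the option is omitted. The names `is_older_than` and `keep_until_cutoff` are hypothetical, and plain (id, timestamp) tuples stand in for PRAW submissions.

```python
from datetime import datetime, timezone


def is_older_than(created_utc, since):
    """True if a post with this UTC timestamp was created before the cutoff date."""
    if since is None:
        # No cutoff supplied, so nothing is filtered.
        return False
    cutoff = datetime.strptime(since, "%Y%m%d").replace(tzinfo=timezone.utc)
    created = datetime.fromtimestamp(created_utc, tz=timezone.utc)
    return created < cutoff


def keep_until_cutoff(submissions, since):
    """For a newest-first list, keep ids until the first post older than the cutoff."""
    kept = []
    for sub_id, created_utc in submissions:
        if is_older_than(created_utc, since):
            break  # everything after this is older still
        kept.append(sub_id)
    return kept


newer = datetime(2023, 8, 21, tzinfo=timezone.utc).timestamp()
older = datetime(2023, 8, 19, tzinfo=timezone.utc).timestamp()
print(keep_until_cutoff([("a", newer), ("b", older)], "20230820"))
```

Comparing in UTC on both sides avoids mixing the local-time result of a bare `datetime.fromtimestamp()` with a timestamp that Reddit reports in UTC.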

Wrapping into a Script

With the necessary modifications made to bdfr, it can now be wrapped in a script to run regularly. I was planning to tackle this in the bash scripting language, but it became more complex than I was prepared for, so I wrote it in Python instead. The entirety of the wrapping script is below.

    import os
    from datetime import date, datetime, timedelta

    try:
        # Log and state files are looked up in the current working directory
        cwd = os.getcwd()
        with open(os.path.join(cwd,"last_run"),"r") as f:
            last_run = f.read().strip()
        #last_run = "20230820"
        lr_date = date.fromisoformat(last_run[:4]+"-"+last_run[4:6]+"-"+last_run[6:])
        comp = date.today() - lr_date
        # Start scraping one day before the last run
        scrape_from = lr_date - timedelta(days=1)
        if comp.days > 30:
            os.system("bdfr clone . -s Pirate101 -S new --log bdfr_log --since "+scrape_from.strftime("%Y%m%d"))
            # Count the .json files modified within the last three days
            count_stream = os.popen("find Pirate101/*.json -maxdepth 1 -mtime -3 | wc -l")
            downloaded = count_stream.read()
            count_stream.close()
            errors = []
            with open(os.path.join(cwd,"bdfr_log"),"r") as f:
                for i in f:
                    if "ERROR" in i and "429 HTTP" in i:
                        sub = i.find("Submission")
                        post_id = i[sub+11:sub+18]
                        errors.append((post_id,"praw"))
                    elif "ERROR" in i:
                        # One-element tuple, so len() tells the two kinds of entry apart
                        errors.append((i,))
            with open(os.path.join(cwd,"log"),"a+") as f:
                f.write(datetime.today().strftime("%Y-%m-%d %X %Z ")+downloaded)
                for i in errors:
                    if len(i) == 2:
                        f.write("\t429 HTTP: "+i[0]+"\n")
                    else:
                        f.write("\t"+i[0])
            with open(os.path.join(cwd,"last_run"),"w+") as f:
                f.write(date.today().strftime("%Y%m%d"))
    except Exception as e:
        print(e)
        with open(os.path.join(cwd,"log"),"a+") as f:
            f.write(datetime.today().strftime("%Y-%m-%d %X %Z")+"\n")
            f.write("\t"+str(e)+"\n")

At the beginning are the two import statements which bring in the libraries used in this code. Everything thereafter is wrapped in a try-except structure to handle errors gracefully and record them when they happen. The first thing to do is get the current working directory for use in opening and modifying the log files. Next, the date the script was last run is read from the similarly named file. That date is then compared to today, and if more than 30 days have passed the script continues.
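The date arithmetic is easy to check in isolation. Here is a minimal sketch of that step as a function, assuming the last_run file holds a YYYYMMDD string; `plan_run` and its `interval_days` parameter are hypothetical names.

```python
from datetime import date, datetime, timedelta


def plan_run(last_run, today, interval_days=30):
    """Return (should_run, since_arg) for a YYYYMMDD last-run string."""
    lr_date = datetime.strptime(last_run, "%Y%m%d").date()
    should_run = (today - lr_date).days > interval_days
    # Start one day before the last run, formatted the way --since expects.
    since_arg = (lr_date - timedelta(days=1)).strftime("%Y%m%d")
    return should_run, since_arg


print(plan_run("20230820", date(2023, 9, 25)))
print(plan_run("20230820", date(2023, 9, 19)))
```

The first call is 36 days after the last run, so it reports that a scrape is due. The second is exactly 30 days after, which does not pass the strict greater-than comparison.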

My specially crafted bdfr statement is executed from my computer’s shell via os.system(). The first few arguments are fairly standard and identical to what I used for the initial scrape back in May. The first new argument is --log, which sets a custom location for bdfr’s log file for this run. It is normally stored in ~/.config/bdfr/log_output.txt but I wanted it in the local directory. The final argument is my custom --since, which accepts the earliest date to grab from. This process runs to completion, which can take anywhere from several minutes to a few hours on particularly long scrapes.
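os.system() takes the whole command as a single string. An alternative is subprocess.run() with a list of arguments, which sidesteps shell quoting and returns the exit code directly. This is a sketch that assumes the modified bdfr with the custom --since option is on the PATH; `build_bdfr_command` and `run_bdfr` are hypothetical helper names.

```python
import subprocess


def build_bdfr_command(since, subreddit="Pirate101", log_path="bdfr_log"):
    """Assemble the bdfr clone call as an argument list."""
    return [
        "bdfr", "clone", ".",
        "-s", subreddit,
        "-S", "new",
        "--log", log_path,
        "--since", since,  # custom option added to the modified bdfr
    ]


def run_bdfr(since):
    """Run the command and return its exit code (0 normally means success)."""
    return subprocess.run(build_bdfr_command(since), check=False).returncode


# Only print the command here; calling run_bdfr() would start a real scrape.
print(" ".join(build_bdfr_command("20230819")))
```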

After the actual scraping finishes I have another shell command execute which returns the number of downloaded files. This is not vitally important data but I was curious and figured why not implement it. At the end I check bdfr’s log file to look for posts which failed to download. They are stored in a list and then written to my custom log file. Finally the script updates the last_run file to show the current date.
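The log check can also be pulled out into a function that is easy to test on its own. The sketch below looks for the same markers as the wrapper ("ERROR", "429 HTTP" and "Submission") but uses a regular expression for the post ID instead of fixed character offsets. `collect_errors` is a hypothetical name, and the sample lines are made up for illustration; real bdfr log lines may look different.

```python
import re

# The word following "Submission" is taken to be the post ID.
SUBMISSION_ID = re.compile(r"Submission (\w+)")


def collect_errors(lines):
    """Return a (kind, detail) tuple for every line that contains ERROR."""
    errors = []
    for line in lines:
        if "ERROR" not in line:
            continue
        match = SUBMISSION_ID.search(line)
        if "429 HTTP" in line and match:
            errors.append(("429 HTTP", match.group(1)))
        else:
            errors.append(("other", line.strip()))
    return errors


# Made-up sample lines, not real bdfr output.
sample = [
    "INFO - Submission abc1234 cloned",
    "ERROR - Submission def5678 failed: received 429 HTTP response",
    "ERROR - something else went wrong",
]
for kind, detail in collect_errors(sample):
    print(f"\t{kind}: {detail}")
```

Storing every entry as a two-element tuple means the writing loop does not need to inspect the length of each item to decide how to format it.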

Running at Boot

For previous automated scripts I have used crontab, Linux’s built-in scheduler. This works really well for servers which are ideally on 24/7. I could simply set the script to run once a month and call it a day. But because I am running this script locally on my desktop there is no guarantee that it will be on when the script is supposed to run. For this reason I handled scheduling directly in the Python wrapper and am instead running the script when the computer boots. The easiest way to do this is by setting it in /etc/rc.local.

sudo vi /etc/rc.local

There should already be a line at the top called the “shebang”, a line starting with a #! which tells the computer how to run this executable file. Simply add a new line containing the script to run, in this case my Python wrapper. Each time you need to add another command to run on boot just add it to the next line.

    #!/bin/sh
    python /home/eric/p101/reddit/bdfr/combiner.py
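One thing to watch with boot-time execution: os.getcwd() returns whatever directory the process was started from, which is not necessarily the folder containing the script. Below is a sketch of one way around that, building paths from the script's own location. `script_dir` is a hypothetical helper, and the example path is the one from the rc.local entry.

```python
import os
from pathlib import Path


def script_dir(script_file):
    """Return the folder that contains the given script file."""
    return Path(os.path.abspath(script_file)).parent


# Inside the wrapper this would be script_dir(__file__).
base = script_dir("/home/eric/p101/reddit/bdfr/combiner.py")
print(base / "last_run")
print(base / "log")
```

Relative paths handed to shell commands, such as the `.` given to bdfr clone, would need the same treatment, for example by calling os.chdir(base) before running them.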

With that file saved it is time to wait until 30 days since the last run to see if it works!