Last month’s post was about the background of Google+ and a preliminary attempt to scrape the limited data that was gathered before the shutdown. I wrote a script to read through all of the .cdx.idx files but this did not yield any results. So I decided to bite the bullet and start scraping the full 1.65 PB data set. Again, I have three months left at my university and I would like to make use of this bandwidth while I can.

The last paragraph remains the way I initially wrote it about a month ago. Toward the end of January I had some time on my hands and started writing a script to scrape the full 1.65 PB, having mentally prepared myself for what would probably be a years-long endeavor at best. I upgraded my script dozens of times to improve efficiency and reliability, and even created a complex client-server network so that I could add as many scraping computers as I could get my hands on. I think I’ll create a separate post about that so that my first effort was not in vain.

After a full month of hacking at this problem I figured out a way to do things much more efficiently. Instead of having to download and unzip 33,033 files weighing in at a hefty 50 GB each, I only needed to download the first 4 kB of each file. This amounts to a whopping 99.999992% savings in data: from 1,650,000 GB down to 0.132 GB. Efficiency increases like this are what give credence to the “work smarter, not harder” adage.
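The savings figure can be double-checked with a few lines of Python. This is only a back-of-the-envelope sketch; it assumes decimal units (1 GB = 1,000,000 kB) and uses the file count and sizes quoted above.

```python
# Rough check of the savings figure, using the numbers quoted in the post.
NUM_FILES = 33_033          # files in the collection
FULL_TOTAL_GB = 1_650_000   # 1.65 PB expressed in GB (decimal units assumed)
HEADER_KB = 4               # amount fetched per file, in kB

# Total size of all the 4 kB header downloads, converted from kB to GB.
header_total_gb = NUM_FILES * HEADER_KB / 1_000_000

# Fraction of the full data set that no longer has to be downloaded.
savings_percent = (1 - header_total_gb / FULL_TOTAL_GB) * 100

print(f"header download: {header_total_gb:.3f} GB")
print(f"savings: {savings_percent:.6f}%")
```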

Once uncompressed, each 50 GB file can be seen as containing many smaller files, known as WebArchive (WARC) files. Each one begins with a header containing information about the scrape that obtained the data in the file. The first few fields are listed below.

WARC/1.0
WARC-Type: warcinfo
Content-Type: application/warc-fields
WARC-Date: 2019-03-22T12:15:47Z
WARC-Record-ID: <urn:uuid:8b8d4006-c75f-493b-9fed-044a1074a3b9>
WARC-Filename: googleplus-c08e2178203f29db6193e9f74cb8ea6f5b2cea80-20190322-121547.warc.gz
WARC-Block-Digest: sha1:N4BCDTQDIZF4FK224A5S6HSFL7XCZWTQ
Content-Length: 15797
software: Wget/1.14.lua.20160530-955376b (linux-gnu)
format: WARC File Format 1.0
conformsTo: http://bibnum.bnf.fr/WARC/WARC_ISO_28500_version1_latestdraft.pdf
robots: off

But the most interesting field is the list of arguments that comprised this specific wget scrape.

wget-arguments: "-U" "Mozilla/5.0 (Windows NT 10.0; rv:47.0) Gecko/20100101 Firefox/47.0" "-nv" "--no-cookies" "--lua-script" "googleplus.lua" "-o" "/data/data/projects/googleplus-e178083/data/1553256947e9ba3e5c04488449-949/c08e2178203f29db6193e9f74cb8ea6f5b2cea80/wget.log" "--no-check-certificate" "--output-document" "/data/data/projects/googleplus-e178083/data/1553256947e9ba3e5c04488449-949/c08e2178203f29db6193e9f74cb8ea6f5b2cea80/wget.tmp" "--truncate-output" "-e" "robots=off" "--rotate-dns" "--recursive" "--level=inf" "--no-parent" "--page-requisites" "--timeout" "30" "--tries" "inf" "--domains" "google.com" "--span-hosts" "--waitretry" "30" "--warc-file" "/data/data/projects/googleplus-e178083/data/1553256947e9ba3e5c04488449-949/c08e2178203f29db6193e9f74cb8ea6f5b2cea80/googleplus-c08e2178203f29db6193e9f74cb8ea6f5b2cea80-20190322-121547" "--warc-header" "operator: Archive Team" "--warc-header" "googleplus-dld-script-version: 20190308.14" "--warc-header" "googleplus-item: users:smap/19249/wg" "--warc-header" "googleplus-user: 104474511499273456549" "https://plus.google.com/104474511499273456549" "--warc-header" "googleplus-user: 107002576361659678344" "https://plus.google.com/107002576361659678344" "--warc-header" "googleplus-user: 106524555505828898779" "https://plus.google.com/106524555505828898779" "--warc-header" "googleplus-user: 114629123457088464404"

The https://plus.google.com/ URLs in the section above are the actual links being downloaded by the scrape. I pasted only the first three links, but this particular scrape looked at 99.
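As a rough illustration of how those links could be pulled out of the header, here is a minimal Python sketch. It assumes the `wget-arguments` field sits on a single line of double-quoted arguments, as in the excerpt above; the function name `profile_ids` and the shortened `sample` string are made up for this example and are not taken from my actual script.

```python
import shlex

PROFILE_PREFIX = "https://plus.google.com/"

def profile_ids(wget_arguments_line):
    """Return the Google+ profile IDs that appear as URL arguments."""
    # Drop the field name, then split the rest the way a shell would,
    # so that each double-quoted argument becomes one list element.
    _, _, quoted_args = wget_arguments_line.partition("wget-arguments:")
    args = shlex.split(quoted_args)
    return [a[len(PROFILE_PREFIX):] for a in args if a.startswith(PROFILE_PREFIX)]

# Shortened excerpt of the header field shown above (illustration only).
sample = (
    'wget-arguments: "--warc-header" "googleplus-user: 104474511499273456549" '
    '"https://plus.google.com/104474511499273456549" '
    '"--warc-header" "googleplus-user: 107002576361659678344" '
    '"https://plus.google.com/107002576361659678344"'
)
print(profile_ids(sample))
```

Checking whether a given profile was part of a scrape then comes down to testing whether its ID is in the returned list.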

The main problem with this approach is that most tools try their best to download whole files and not just parts. The only way I could get wget to download a partial file was to use Ctrl+C to cancel the operation. This worked for testing but I would not be able to send this same signal in code (that I know of). After some searching I found that the curl utility is able to do what I want. Using the -r flag I can specify which range of bytes to download. After further testing I figured out that I also needed to include -L in order to follow redirects and --output to save the data under a specified filename.

curl https://archive.org/download/archiveteam_googleplus_20190322122657_bf885c4c/googleplus_20190322122657_bf885c4c.megawarc.warc.gz -vL --output curl_attempt.gz -r 0-4000
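The same partial download can probably be done without shelling out to curl. The sketch below is not what my script does; it is an assumed equivalent using Python's standard `urllib`, where the `Range` header plays the role of curl's `-r` flag. The function names and default byte values are my own choices for illustration.

```python
import urllib.request

def build_range_request(url, first_byte=0, last_byte=4000):
    """Build a GET request for an inclusive byte range, like curl -r 0-4000."""
    request = urllib.request.Request(url)
    request.add_header("Range", f"bytes={first_byte}-{last_byte}")
    return request

def fetch_range(url, first_byte=0, last_byte=4000):
    """Download only the requested bytes of a remote file."""
    request = build_range_request(url, first_byte, last_byte)
    # urlopen follows redirects by default, similar to curl's -L flag.
    with urllib.request.urlopen(request, timeout=30) as response:
        # Cap the read in case the server ignores the Range header
        # and starts sending the whole 50 GB file.
        return response.read(last_byte - first_byte + 1)

# Example call (needs network access, so it is left commented out):
# data = fetch_range("https://archive.org/download/archiveteam_googleplus_20190322122657_bf885c4c/googleplus_20190322122657_bf885c4c.megawarc.warc.gz")
```

Note that a range of 0-4000 is inclusive on both ends, so it returns 4,001 bytes.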

With the header portion of the file downloaded, I now needed to unzip it. When I used gunzip on this file I ran into some weird behavior. Because I stopped downloading the file partway through, gunzip complained about an unexpected end of file and refused to save the output. I found a workaround: the -c flag makes gunzip write the unzipped stream to standard output, which would otherwise print straight to the terminal, so I redirected it into a new file. It’s kind of a hacky solution but it works for my purposes.

gunzip curl_attempt.gz -c > curl_attempt
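For anyone who would rather stay inside Python, a truncated gzip stream can also be decoded with the standard `zlib` module, which hands back whatever it managed to decompress instead of refusing outright. This is a minimal sketch and not the method used in this post; `gunzip_partial` is a made-up name, and the sample data is generated on the spot purely to show the behavior.

```python
import gzip
import zlib

def gunzip_partial(data):
    """Decompress as much of a (possibly truncated) gzip stream as possible."""
    output = b""
    while data:
        # wbits = MAX_WBITS | 16 tells zlib to expect a gzip header.
        decompressor = zlib.decompressobj(wbits=zlib.MAX_WBITS | 16)
        output += decompressor.decompress(data)
        if not decompressor.eof:
            break  # the stream was cut off partway through this member
        # .warc.gz files hold many gzip members back to back,
        # so continue with whatever follows the finished member.
        data = decompressor.unused_data
    return output

# Simulate a partial download by cutting a compressed blob in half.
original = "\n".join(f"googleplus-user: {i * 7919}" for i in range(2000)).encode()
compressed = gzip.compress(original)
truncated = compressed[: len(compressed) // 2]
recovered = gunzip_partial(truncated)
print(len(recovered), "of", len(original), "bytes recovered")
```

The recovered bytes are always a prefix of the original data, which is all that is needed when only the header at the start of the file matters.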

With a sample file downloaded and unzipped, it was time to automate the process. I adapted the same Python script I used in the last post to download .cdx.gz files. For each id in the collection list file, I downloaded the file with the curl command and unzipped it with gunzip as explained above. When reading through each file I again looked for the Pirate101 Google+ id: 107874705249222268208.

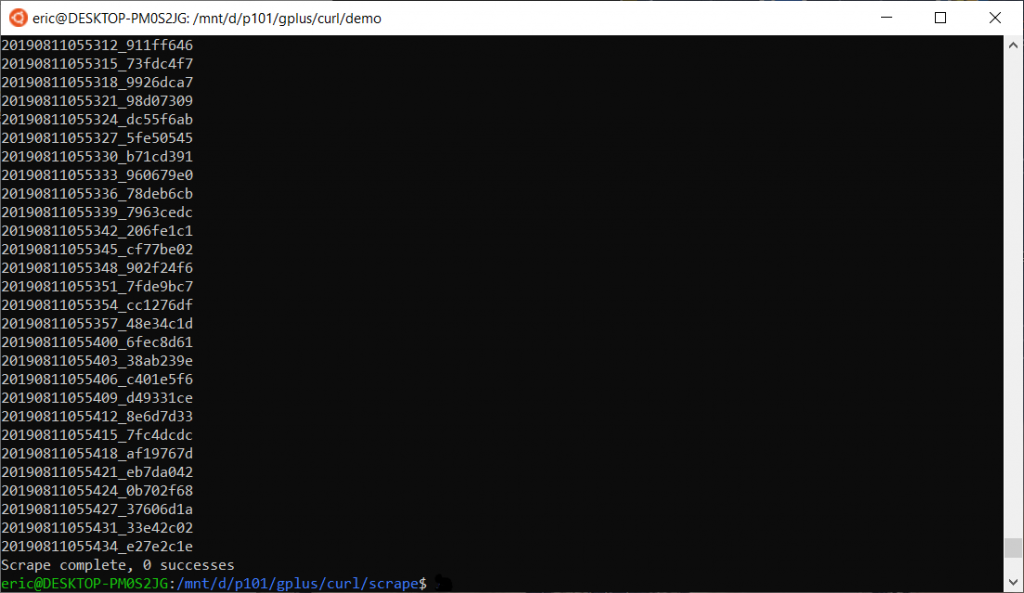

And again, my search ended unsuccessfully. The script stopped unexpectedly a few times, complaining that a file did not exist, but I included a section in the code to save the most recent id before stopping. Simply starting the script back up continued where it had left off and the problem wouldn’t show itself for another few hours. In total the scrape took less than a day to look at the header information for all 33,033 files, without finding a single page under the Pirate101 Google+ account.
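The resume-where-it-left-off behavior described above could look roughly like the sketch below. It is a simplified stand-in rather than my actual script: `scan`, `header_for`, and the checkpoint file are hypothetical names, `header_for` is assumed to return the unzipped header text for one collection id, and this version records progress after every item rather than only right before stopping.

```python
from pathlib import Path

TARGET_ID = "107874705249222268208"  # Pirate101 Google+ id from the post

def scan(item_ids, header_for, checkpoint):
    """Scan headers in order, resuming after the last id saved in checkpoint."""
    # Read the last finished id, if a previous run left one behind.
    done = checkpoint.read_text().strip() if checkpoint.exists() else ""
    start = item_ids.index(done) + 1 if done in item_ids else 0

    hits = []
    for item_id in item_ids[start:]:
        if TARGET_ID in header_for(item_id):
            hits.append(item_id)
        # Save progress so a crash only costs the item in flight.
        checkpoint.write_text(item_id)
    return hits

# Example usage with a hypothetical fetch_header function:
# hits = scan(all_ids, fetch_header, Path("last_id.txt"))
```

One limitation of this sketch is that hits found before a crash are only kept in memory, so a real run would want to append them to a file as well.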

I didn’t expect to find a ton of hidden P101 content; I was mainly hoping to find where and when the single saved webpage from the ArchiveTeam scrape was captured, to see if I could glean any additional clues. My guess is that the reason only one capture of the P101 profile was made is that it was linked from another G+ profile. I checked several times and made sure that the script was able to correctly identify the profile IDs, so the lack of found pages is not a result of bad code.

Regardless, more and more evidence is pointing to a reality where P101 data was not saved in the Google+ archiving effort. I do not think it is worth going through with my initially planned 1.65 PB scrape. This would take years and likely destroy any hard drives or SSDs that would be used. I would still like to take what little data is left on the Wayback Machine and mirror it on its own gplus.p101 domain, but the overwhelming majority is permanently lost.